Embedding Evolutionary Principles for Transformation

The transformation journey shouldn’t end with architecture diagrams or deployed services. It needs to grow into a living system that thrives on continuous improvement. To make that happen, we need to build a framework that consistently:

- Checks for alignment with business goals

- Detects system health issues early

- Supports small, safe, and iterative changes

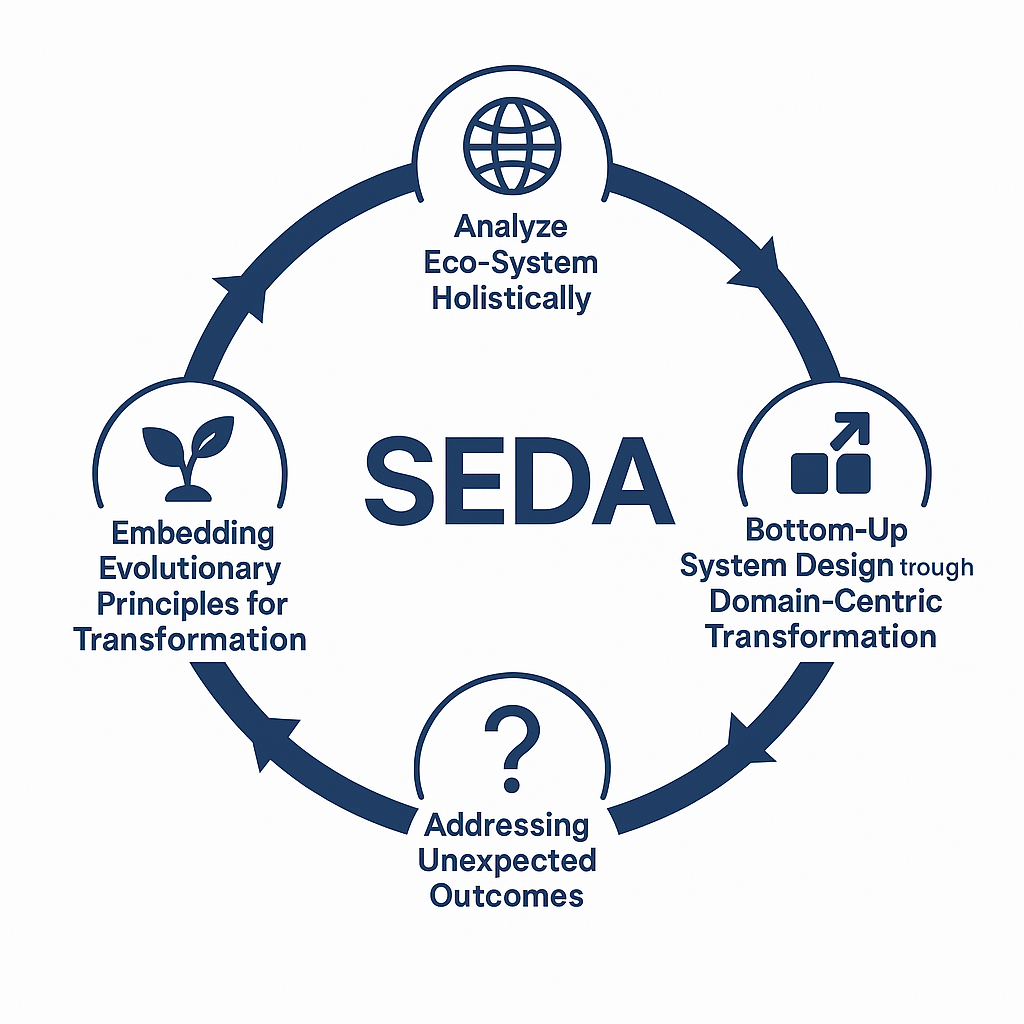

Part One explained that the SEDA framework consists of four steps and briefly discussed each of them. The fourth and final step, Embedding Evolutionary Principles for Transformation, is what we’ll explore in detail in this article.

Global System Alignment With Architectural Fitness Functions

A fitness function is a measurable, automated, and context-specific rule that helps determine how well a software system is meeting its technical and business goals.

In the context of software transformation, especially within SEDA, it acts like a real-time checkpoint, continuously verifying that the evolving system stays within acceptable behavioral and structural limits.

“An architectural fitness function is any mechanism that provides an objective integrity assessment of some architectural characteristic(s).” [1]

The goals of using fitness functions in SEDA include:

- Preventing architectural or domain drift after transformation

- Defining what good looks like for each subsystem

- Avoiding regressions in system design or behavior

Neal Ford and his colleagues introduced architectural fitness functions [2], and SEDA uses them as the foundation of its fourth phase, the evolutionary phase.

Scope-Based Functions

A Scope-Based Fitness Function in software testing refers to an approach where the testing is clearly guided by the defined boundaries of the software components or features being examined. This kind of fitness function ensures that testing stays focused on specific requirements or modules, helping avoid unnecessary effort on areas that fall outside the intended scope. Scope-based fitness functions fall into the two categories below.

Atomic Fitness Function

An Atomic Fitness Function evaluates each part of a system on its own. It looks at specific features or components in isolation, using detailed metrics to measure how well each individual piece performs. Unit tests are a typical example of such functions.
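To make this concrete, here is a minimal sketch of an atomic fitness function that inspects one module in isolation. The rule it enforces (code in a billing module must not import from a `shipping` package) and the names `FORBIDDEN_PREFIXES` and `forbidden_imports` are assumptions made for illustration, not part of SEDA.

```python
import ast

# Hypothetical rule: this module must not import anything from "shipping".
FORBIDDEN_PREFIXES = ("shipping",)

def forbidden_imports(source: str, forbidden=FORBIDDEN_PREFIXES) -> list[str]:
    """Return the imported module names in `source` that break the rule."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]
        else:
            continue
        for name in names:
            # Match the package itself or any of its submodules.
            if any(name == p or name.startswith(p + ".") for p in forbidden):
                violations.append(name)
    return violations

sample = "import os\nfrom shipping.rates import quote\n"
print(forbidden_imports(sample))
```

Because the check only needs the source text of a single module, it can run as an ordinary unit test.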

Holistic Fitness Function

A Holistic Fitness Function, on the other hand, looks at the system as a whole. It takes into account how all the parts work together and evaluates overall performance, offering a complete picture of how well the solution functions.
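The difference in scope can be sketched with a small example in which every service passes its own limit while the flow as a whole does not. The service names, latency figures, and limits are made up, and adding per-service latencies is a simplification that assumes the services are called one after another.

```python
# Hypothetical p95 latencies (ms) for the services on one checkout request path.
latencies_ms = {"gateway": 40, "cart": 90, "payment": 180, "inventory": 95}

PER_SERVICE_LIMIT_MS = 200  # rule applied to each service on its own
END_TO_END_LIMIT_MS = 350   # rule applied to the whole flow

def each_service_ok(latencies, limit=PER_SERVICE_LIMIT_MS):
    """Per-component view: every service stays under its own limit."""
    return all(value <= limit for value in latencies.values())

def whole_flow_ok(latencies, limit=END_TO_END_LIMIT_MS):
    """Holistic view: the combined latency of the flow stays under budget."""
    return sum(latencies.values()) <= limit

print(each_service_ok(latencies_ms), whole_flow_ok(latencies_ms))
```

Here each service looks healthy in isolation, yet the combined budget is exceeded, which is exactly the kind of problem only a holistic check can surface.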

Cadence-Based Functions

Cadence-Based Fitness Functions in software testing are evaluation methods that track the health, performance, or quality of software components over time. They focus on the regularity or rhythm of testing and updates to ensure consistent improvement and stability. These fitness functions can be grouped into three main categories, as outlined below.

Triggered Fitness Function

The Triggered Fitness Function runs automatically in response to specific events, like a code commit or a failed test, providing immediate, reactive feedback. This helps catch and address issues at critical stages in the development process.

Continual Fitness Function

A Continual Fitness Function runs continuously or at regular intervals, independent of specific events. It provides ongoing insight into system stability and performance, making it valuable for early detection of regressions or performance degradation.

Temporal Fitness Function

A Temporal Fitness Function evaluates software performance based on its behavior or metrics over a specific time period (e.g., uptime over 30 days). It’s particularly useful for assessing long-term reliability, consistency, and usage patterns.
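As an illustration of the uptime example, the sketch below computes availability over a 30-day window from a list of outage intervals. The function name `uptime_ratio`, the sample outage, and the 99.9% target are assumptions, and the outages are assumed not to overlap.

```python
from datetime import datetime, timedelta

def uptime_ratio(outages, window_start, window_end):
    """Fraction of the window during which the service was up.

    `outages` is a list of (start, end) datetimes, assumed non-overlapping.
    """
    window_seconds = (window_end - window_start).total_seconds()
    down_seconds = 0.0
    for start, end in outages:
        # Count only the part of the outage that falls inside the window.
        clipped_start = max(start, window_start)
        clipped_end = min(end, window_end)
        if clipped_end > clipped_start:
            down_seconds += (clipped_end - clipped_start).total_seconds()
    return 1.0 - down_seconds / window_seconds

window_end = datetime(2024, 7, 1)
window_start = window_end - timedelta(days=30)
# One made-up 36-minute outage inside the window.
outages = [(datetime(2024, 6, 10, 2, 0), datetime(2024, 6, 10, 2, 36))]

SLO = 0.999  # hypothetical availability target
ratio = uptime_ratio(outages, window_start, window_end)
print(f"uptime={ratio:.5f} meets_slo={ratio >= SLO}")
```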

Result-Based Functions

Result-Based Fitness Functions in software testing are used to evaluate how well software performs by looking at the outcomes it produces during testing. Instead of focusing on internal processes, these functions assess the correctness, reliability, and completeness of the results to determine whether the software meets its intended goals. These fitness functions can be grouped into two main categories, as outlined below.

Static Fitness Function

Static Fitness Functions evaluate test results against predefined criteria or expected outcomes, without taking runtime conditions into account. They are useful for validating logic, checking true/false results, ensuring correctness, and confirming compliance with known requirements.

Dynamic Fitness Function

Dynamic Fitness Functions evaluate test results by observing runtime behavior and adapting to changing conditions during execution. They’re ideal for assessing performance, scalability, and real-time decision-making in response to varying inputs.
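The contrast between the two can be sketched with a latency check. The static version compares against one fixed limit, while the dynamic version lets the acceptable limit shift with the current load. The thresholds and the scaling policy below are invented for illustration.

```python
STATIC_LIMIT_MS = 300  # hypothetical fixed limit

def static_check(p95_latency_ms: float) -> bool:
    """Static: the same pass/fail criterion regardless of runtime conditions."""
    return p95_latency_ms <= STATIC_LIMIT_MS

def dynamic_limit_ms(concurrent_users: int) -> float:
    """Dynamic: the acceptable latency depends on load.

    Assumed policy: 300 ms up to 1,000 users, plus 50 ms for every
    additional 1,000 users, capped at 500 ms.
    """
    extra = max(0, concurrent_users - 1000) / 1000 * 50
    return min(STATIC_LIMIT_MS + extra, 500)

def dynamic_check(p95_latency_ms: float, concurrent_users: int) -> bool:
    return p95_latency_ms <= dynamic_limit_ms(concurrent_users)

print(static_check(350), dynamic_check(350, 3000))
```

With 3,000 concurrent users the same 350 ms measurement fails the static check but passes the dynamic one, because the allowed limit has moved to 400 ms.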

Proactivity-Based Functions

Proactivity-Based Fitness Functions in software testing measure how well a system can anticipate and adapt to future needs or conditions, rather than just reacting to what’s happening now. These functions assess how actively the system works to improve its own performance, robustness, or efficiency. As explained in Building Evolutionary Architectures: Automated Software Governance, they fall into two groups.

Intentional Fitness Function

An Intentional Fitness Function is designed with specific, well-defined goals or desired outcomes in mind. It guides the system to take deliberate, proactive actions that align with strategic objectives, such as self-optimization or predictive maintenance.

Emergent Fitness Function

Emergent Fitness Functions arise naturally from a system’s interactions and behaviors, rather than being directly programmed. They reflect adaptive intelligence and are especially useful in complex or constantly changing environments, where outcomes can’t always be predicted in advance.

Continuous Integration & Delivery (CI/CD)

CI/CD is often seen simply as a tool for automation, but in the context of Software Transformation, it plays a far more meaningful role. It helps ensure alignment with key architectural principles, like domain isolation. It supports safe and observable change, and it evolves into a powerful mechanism for governance, continuous learning, and organizational agility.

Architectural Validator

Every merge and deployment is a chance to test whether our system design and transformation policies are working as intended. In the context of software transformation, CI/CD pipelines are more than just automation tools. They become powerful enforcers of architectural integrity and domain discipline.

One powerful practice is to gate builds when changes cross bounded contexts. This helps ensure teams don’t unintentionally couple domains without making a deliberate architectural decision. It acts as a safeguard against architectural drift and supports decentralized, stream-aligned team autonomy.
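A gate like this can be sketched in a few lines. The example assumes a repository layout in which each top-level directory belongs to one bounded context. The mapping `CONTEXT_BY_DIR`, the `approved` flag, and the function names are hypothetical.

```python
# Hypothetical mapping from top-level directories to bounded contexts.
CONTEXT_BY_DIR = {
    "billing": "Billing",
    "shipping": "Shipping",
    "catalog": "Catalog",
}

def contexts_touched(changed_files):
    """Return the set of bounded contexts affected by a list of changed paths."""
    touched = set()
    for path in changed_files:
        top_level = path.split("/", 1)[0]
        if top_level in CONTEXT_BY_DIR:
            touched.add(CONTEXT_BY_DIR[top_level])
    return touched

def gate(changed_files, approved=False):
    """Return (ok, message). Cross-context changes pass only when explicitly approved."""
    touched = contexts_touched(changed_files)
    if len(touched) > 1 and not approved:
        return False, "Change spans contexts: " + ", ".join(sorted(touched))
    return True, "OK"

print(gate(["billing/invoice.py", "shipping/rates.py"]))
```

In a pipeline, the list of changed paths could come from a command such as `git diff --name-only`, and the `approved` flag from whatever mechanism the team uses to record a deliberate architectural decision.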

By embedding fitness functions directly into the CI/CD pipeline, the process evolves from simple technical validation to enforcing strategic alignment, ensuring that any changes deployed meet both system health standards and business goals before reaching production.

Agent of Feedback Loops

As described in Part 4 of SEDA, transformation relies heavily on rapid feedback. CI/CD turns every code change into a learning opportunity. For example: What breaks? What slows down? Who gets blocked?

Every deployment should serve as a window into how the system behaves, not just from a technical perspective, but strategically as well. By embedding domain-specific metrics, such as checkout latency or onboarding conversion rates, directly into CI/CD, the pipeline evolves into more than just a delivery tool. These checks help ensure the system behaves according to its Domain-Driven Design, so that a broken critical flow is caught before deployment rather than after.
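One way to picture such a check is a pipeline step that compares the checkout latency of a candidate build with a baseline. This is only a sketch: the sample data is made up, and the 10% tolerance and the function names are assumptions.

```python
import statistics

MAX_REGRESSION = 0.10  # hypothetical tolerance: fail if p95 grows by more than 10%

def p95(samples):
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]

def checkout_latency_regressed(baseline_ms, candidate_ms, tolerance=MAX_REGRESSION):
    """True when the candidate p95 exceeds the baseline p95 by more than `tolerance`."""
    return p95(candidate_ms) > p95(baseline_ms) * (1 + tolerance)

baseline = [100 + i for i in range(100)]  # made-up latency samples in ms
candidate = [x * 2 for x in baseline]     # a build that doubles checkout latency

if checkout_latency_regressed(baseline, candidate):
    print("Checkout latency regressed; block the deployment")
```

The same pattern applies to other domain metrics, such as an onboarding conversion rate measured in a staging experiment.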

Beyond just blocking regressions, this feedback must flow into the organization’s learning cycles. Pipeline metrics should inform retrospectives, shape team priorities, and evolve fitness functions to reflect emerging system realities.

Business Alignment Enabler

CI/CD should focus on delivering continuous business value, not just shipping features. With the Systemic Event Discovery Approach (SEDA), business intent is built into the pipeline, ensuring every release aligns with broader goals. Connecting commits to business goals or OKRs ensures that every line of code serves a clear purpose rather than adding output for output’s sake. This alignment helps scale transformation with intention, making it easier to trace delivery back to real value.
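How commits are linked to goals depends on the team’s conventions. As one possible sketch, assume each commit message carries a trailer line such as `OKR: GROWTH-2`. Both the trailer name and the ID format are hypothetical, and a pipeline step could flag commits that carry no such reference.

```python
import re

# Hypothetical convention: a trailer line like "OKR: GROWTH-2" in the commit message.
OKR_TRAILER = re.compile(r"^OKR:\s*([A-Z]+-\d+)\s*$", re.MULTILINE)

def okr_ids(commit_message: str) -> list[str]:
    """Return every OKR identifier referenced in the commit message."""
    return OKR_TRAILER.findall(commit_message)

def has_business_link(commit_message: str) -> bool:
    return bool(okr_ids(commit_message))

message = "Add saved carts to checkout\n\nOKR: GROWTH-2\n"
print(okr_ids(message), has_business_link("Fix typo"))
```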

Our goal is to help organizations approach transformation as a practical, evidence-based journey. Feature toggles support this by enabling safe, real-world experimentation, so teams can test business ideas with actual users without compromising system stability. Combined with CI/CD, they support an iterative, feedback-driven approach to transformation.
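A simple form of feature toggle is a percentage rollout, sketched below. Hashing the flag name together with the user ID gives each user a stable bucket, so the same user always sees the same variant while the rollout percentage is raised step by step. The flag name and function signature are assumptions for illustration.

```python
import hashlib

def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Deterministically decide whether `user_id` sees the feature behind `flag`."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).digest()
    # Map the first four bytes of the hash to a bucket from 0 to 99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < rollout_percent

# Hypothetical flag: expose a new checkout flow to roughly 10% of users.
exposed = [u for u in (f"user-{i}" for i in range(1000))
           if is_enabled("new-checkout", u, 10)]
print(len(exposed))
```

Because the bucket does not change, any user who is included at 10% stays included when the rollout grows to 50%, which keeps the experiment consistent for real users.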

Post-Deployment Monitoring

In the context of software transformation, Post-Deployment Monitoring acts as a safety net right after new changes go live. Its main goal is to confirm that everything works as expected, both technically and from a user experience standpoint, and that the release has not introduced bugs or regressions or crossed domain boundaries.

This phase involves short-term observation, keeping a close eye on logs, latency, error rates, and overall service availability.

On the other hand, real-time monitoring of business metrics, which are described in Part 4, serves a more strategic and ongoing purpose. It focuses on tracking high-level performance indicators, like conversion rates, transaction volumes, or user retention, independent of any specific deployment.

In a successfully transformed system, where components are decoupled and changes happen frequently, the monitoring layer plays an important role. It helps catch regressions, unexpected behaviors, or contract violations early, before they spread across domains or affect users.

Domain Fitness Check

To set up effective Post-Deployment Monitoring, begin by defining clear expectations for each domain or bounded context. SEDA calls this a Domain Fitness Check: clarify what success looks like, which types of failures need to be detected, and which metrics indicate the system is running smoothly.

Use monitoring tools like Prometheus, Grafana, or Datadog to keep an eye on key metrics such as request latency, error rates, database response times, and communication between services.
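Whatever tool collects the data, the expectations themselves can be written down as plain data and evaluated against a metrics snapshot. The sketch below assumes the snapshot has already been fetched from the monitoring system as a simple mapping. The domains, metric names, and limits are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Expectation:
    metric: str
    max_value: float

# Hypothetical expectations per bounded context.
EXPECTATIONS = {
    "checkout": [Expectation("p95_latency_ms", 400), Expectation("error_rate", 0.01)],
    "catalog": [Expectation("p95_latency_ms", 250)],
}

def violations(domain: str, snapshot: dict) -> list[str]:
    """Compare a metrics snapshot (metric name -> value) with the domain's expectations."""
    problems = []
    for expectation in EXPECTATIONS[domain]:
        value = snapshot.get(expectation.metric)
        if value is None:
            # Missing data is treated as a failure rather than silently ignored.
            problems.append(f"{expectation.metric}: no data")
        elif value > expectation.max_value:
            problems.append(f"{expectation.metric}: {value} > {expectation.max_value}")
    return problems

print(violations("checkout", {"p95_latency_ms": 380, "error_rate": 0.03}))
```

Keeping the expectations next to the code of each domain makes it easier for the owning team to review and adjust them as the system evolves.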

Semantic & Behavioral Check

In SEDA, a Semantic & Behavioral Check complements the Domain Fitness Check. For example, verify that a customer profile update is correctly propagated to downstream systems, or that new events are published in the expected format. Use automated health probes, synthetic transactions, and end-to-end assertions to simulate real user interactions and confirm that everything works correctly after deployment.
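The event-format part of such a check might look like the sketch below, which validates a published event against the fields a consumer expects. The event shape (`event_type`, `customer_id`, `occurred_at`, `version`) is an assumption for illustration, and a real system may prefer a schema registry or a contract-testing tool.

```python
# Hypothetical contract for a "customer profile updated" event.
REQUIRED_FIELDS = {
    "event_type": str,
    "customer_id": str,
    "occurred_at": str,
    "version": int,
}

def event_problems(event: dict) -> list[str]:
    """Return a list of contract violations for one published event."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in event:
            problems.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            problems.append(f"wrong type for {field}: expected {expected_type.__name__}")
    return problems

event = {
    "event_type": "CustomerProfileUpdated",
    "customer_id": "c-123",
    "version": "2",  # published as a string by mistake
}
print(event_problems(event))
```

A synthetic transaction can publish a test update after each deployment and run the resulting event through a check like this before real traffic depends on it.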

Wrapping Up

Embedding Evolutionary Principles for Transformation marks a shift into the operational maturity stage of software transformation, where bold architectural visions give way to steady, deliberate progress. Instead of relying on risky, large-scale rollouts, this phase focuses on controlled, feedback-driven improvements inside each domain.

It encourages organizations to adopt an evolutionary mindset, treating every system change as an opportunity to strengthen business alignment, maintain domain integrity, and improve overall system health.

These practices reflect a core truth about Sustainable Transformation: it’s never truly finished; it’s always evolving.

This approach is built on three key practices: Fitness Functions, CI/CD, and Post-Deployment Monitoring.

Fitness functions serve as programmable guardrails, constantly checking that the system stays aligned with both business goals and technical constraints.

CI/CD pipelines aren’t just about faster delivery. They become strategic tools for reinforcing architecture, tightening feedback loops, and ensuring that each change meets domain-specific expectations.

Post-deployment monitoring completes the cycle. It confirms that changes perform safely and effectively in the real world, supporting the transformation’s goals through real-time insights and the ability to adapt quickly.

1. Building Evolutionary Architectures: Automated Software Governance, Neal Ford, Rebecca Parsons, Patrick Kua, Pramod Sadalage

2. Building Evolutionary Architectures: Automated Software Governance, Neal Ford, Rebecca Parsons, Patrick Kua, Pramod Sadalage